Common Pandas Operations

Deduplication

```python
BASE_EXCG = BASE_EXCG.drop_duplicates(subset=None, keep='first', inplace=False)
```

Merge

```python
IDV_TD = pd.merge(IDV_TD, BASE_EXCG, left_on='CCY_CD', right_on='CCY_LETE_CD', how='left')
```
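Deduplicating `BASE_EXCG` before this left merge matters because a repeated key on the right side multiplies the matching left rows. A small sketch with made-up currencies and rates (all data here is hypothetical); the `validate='many_to_one'` argument of `pd.merge` makes pandas raise a `MergeError` instead of silently duplicating rows.

```python
import pandas as pd

# Hypothetical deposit rows and exchange-rate table (values are made up)
IDV_TD = pd.DataFrame({"CUST_NO": [1, 2, 3],
                       "CCY_CD": ["USD", "CNY", "EUR"],
                       "CRBAL": [100.0, 500.0, 50.0]})
BASE_EXCG_raw = pd.DataFrame({"CCY_LETE_CD": ["USD", "USD", "CNY"],
                              "RMB_MID_PRIC": [7.1, 7.1, 1.0]})

# Without deduplication the USD deposit matches two rate rows: 4 rows instead of 3
dup_merged = pd.merge(IDV_TD, BASE_EXCG_raw, left_on="CCY_CD",
                      right_on="CCY_LETE_CD", how="left")
print(len(dup_merged))  # 4

BASE_EXCG = BASE_EXCG_raw.drop_duplicates(keep="first")
# validate= raises pandas.errors.MergeError if CCY_LETE_CD is still not unique
merged = pd.merge(IDV_TD, BASE_EXCG, left_on="CCY_CD", right_on="CCY_LETE_CD",
                  how="left", validate="many_to_one")
print(len(merged))  # 3
```

Both key columns (`CCY_CD` and `CCY_LETE_CD`) remain in the result, and the unmatched EUR row gets NaN for the rate.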

Drop columns

```python
IDV_TD.drop(['RAT_CTG', 'FXDI_SA_ACCM'], axis=1, inplace=True)
```

Column arithmetic

```python
IDV_TD = IDV_TD.eval('CRBAL_RMB = CRBAL * RMB_MID_PRIC')
```

Reset index

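```python
# reset_index() returns a new frame, so assign it back (the old index becomes a column)
IDV_TD_after = IDV_TD_after.reset_index()
```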
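`reset_index()` does not modify the frame in place by default, so its result has to be assigned. It is most useful after a `groupby` aggregation, where the group keys end up in the index. A minimal sketch with hypothetical data:

```python
import pandas as pd

# Hypothetical per-deposit rows
IDV_TD = pd.DataFrame({"CUST_NO": [1, 1, 2], "CRBAL": [10.0, 20.0, 5.0]})

# After groupby().sum(), CUST_NO is the index, not a column
IDV_TD_after = IDV_TD.groupby("CUST_NO").sum()
print(IDV_TD_after.index.name)  # CUST_NO

# reset_index() moves CUST_NO back into a regular column and restores a 0..n-1 index
IDV_TD_after = IDV_TD_after.reset_index()
print(IDV_TD_after.columns.tolist())  # ['CUST_NO', 'CRBAL']
```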

Rename columns

```python
# Prefix every column except the key CUST_NO with 'IDV_TD_', then write to CSV
IDV_TD_after_after.rename(
    columns=lambda x: x if x == 'CUST_NO' else 'IDV_TD_' + x
).to_csv("./data/IDV_TD_out.csv", index=False)
```

Change column dtype

```python
IDV_CUST_BASIC['OCP_CD'] = IDV_CUST_BASIC['OCP_CD'].astype(str)
```

Fill NaN

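```python
# Assign the result back: chained fillna(..., inplace=True) on a column
# stops modifying the DataFrame once copy-on-write is enabled
IDV_CUST_BASIC['OCP_CD'] = IDV_CUST_BASIC['OCP_CD'].fillna('NULL')
```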
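The order of the dtype change and the NaN fill matters. With pandas 1.x/2.x defaults, `astype(str)` turns NaN into the literal string `'nan'`, after which `fillna` has nothing left to fill. A small sketch with a hypothetical `OCP_CD` column:

```python
import numpy as np
import pandas as pd

# Hypothetical occupation codes with one missing value (stored as float64)
IDV_CUST_BASIC = pd.DataFrame({"OCP_CD": [10, np.nan, 30]})

# astype(str) first: NaN becomes the string 'nan', so fillna finds nothing to fill
wrong = IDV_CUST_BASIC["OCP_CD"].astype(str).fillna("NULL")

# fillna first, then astype(str): the placeholder survives
right = IDV_CUST_BASIC["OCP_CD"].fillna("NULL").astype(str)
print(wrong.tolist())  # ['10.0', 'nan', '30.0']
print(right.tolist())  # ['10.0', 'NULL', '30.0']
```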

groupby+join

```python
IDV_TD_group = IDV_TD.groupby(['CUST_NO', 'DATA_DAT'])
```
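The original snippet stops after the `groupby` call. One plausible continuation, assumed here rather than taken from the source, aggregates each (`CUST_NO`, `DATA_DAT`) group with named aggregation and then joins the result onto a customer-level table with `DataFrame.join(..., on=...)`. The aggregations (`sum`, `count`), the output names `CRBAL_SUM`/`TD_CNT` and the customer table are all hypothetical.

```python
import pandas as pd

# Hypothetical deposit rows; CRBAL values are made up
IDV_TD = pd.DataFrame({
    "CUST_NO": [1, 1, 2],
    "DATA_DAT": ["20190101"] * 3,
    "CRBAL": [10.0, 20.0, 5.0],
})
IDV_TD_group = IDV_TD.groupby(["CUST_NO", "DATA_DAT"])

# Named aggregation: one row per (CUST_NO, DATA_DAT), indexed by the group keys
agg = IDV_TD_group.agg(CRBAL_SUM=("CRBAL", "sum"), TD_CNT=("CRBAL", "count"))

# Hypothetical customer table; join() matches its key columns to agg's MultiIndex
cust = pd.DataFrame({"CUST_NO": [1, 2, 3], "DATA_DAT": ["20190101"] * 3})
result = cust.join(agg, on=["CUST_NO", "DATA_DAT"], how="left")
print(result)  # customer 3 has no deposits, so its aggregates are NaN
```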

Add a new column

```python
IDV_TD_after_after['HAS_IDV_TD'] = 1
```

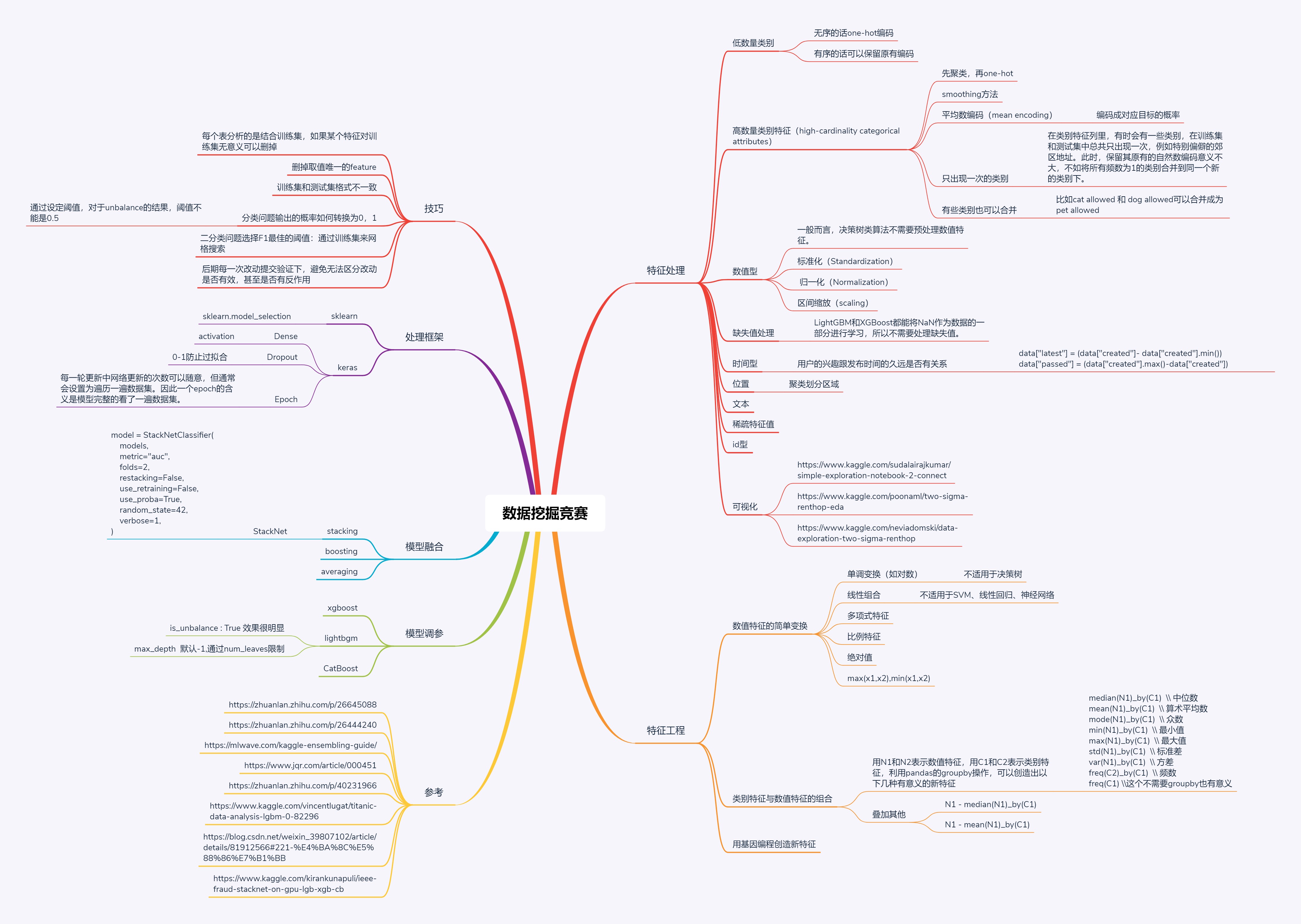
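A constant flag column like `HAS_IDV_TD` is typically paired with a left merge onto a larger customer table: customers with no row in the deposit table get NaN in the flag, which `fillna(0)` turns into 0. This is an assumed usage pattern; the customer table, the `IDV_TD_CRBAL` column and the values are hypothetical.

```python
import pandas as pd

# Hypothetical customer-level table and per-customer deposit features
cust = pd.DataFrame({"CUST_NO": [1, 2, 3]})
IDV_TD_after_after = pd.DataFrame({"CUST_NO": [1, 3], "IDV_TD_CRBAL": [30.0, 7.0]})

# Constant flag: every row in this table means "customer has a time deposit"
IDV_TD_after_after["HAS_IDV_TD"] = 1

# After a left merge, customers absent from the deposit table get NaN; fill with 0
out = cust.merge(IDV_TD_after_after, on="CUST_NO", how="left")
out["HAS_IDV_TD"] = out["HAS_IDV_TD"].fillna(0).astype(int)
print(out["HAS_IDV_TD"].tolist())  # [1, 0, 1]
```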

Common encoding methods

One-hot

```python
# one-hot encoding
```
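The original block only kept its comment line. A minimal sketch using `pd.get_dummies`, one common way to one-hot encode in pandas and not necessarily the author's; the frame and the `OCP_CD` categories are hypothetical.

```python
import pandas as pd

df = pd.DataFrame({"CUST_NO": [1, 2, 3], "OCP_CD": ["A", "B", "A"]})

# One 0/1 column per category; the source column OCP_CD is replaced
onehot = pd.get_dummies(df, columns=["OCP_CD"], prefix="OCP_CD", dtype=int)
print(onehot.columns.tolist())  # ['CUST_NO', 'OCP_CD_A', 'OCP_CD_B']
```

If train and test are encoded separately their dummy columns can differ; `test.reindex(columns=train.columns, fill_value=0)` aligns them.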

Label

```python
# label encoding
```
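Here too only the comment survived. `pd.factorize` maps each category to an integer code in order of first appearance (missing values get -1). This is a sketch; the author may have used another tool such as scikit-learn's `LabelEncoder`, which numbers the sorted classes instead.

```python
import pandas as pd

s = pd.Series(["B", "A", "B", "C"])

# Integer codes in order of first appearance, plus the categories they stand for
codes, uniques = pd.factorize(s)
print(codes.tolist())  # [0, 1, 0, 2]
print(list(uniques))   # ['B', 'A', 'C']
```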

Mean encoding

```python
# mean encoding
```
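Mean (target) encoding replaces each category with the mean target value observed for it in the training data. The sketch below is not the original code: it adds smoothing toward the global mean, encoding a category seen n times as (n * category_mean + m * global_mean) / (n + m), where the weight `m` is an assumed hyperparameter. The function name `mean_encode` and the data are hypothetical.

```python
import pandas as pd

def mean_encode(train, test, col, target, m=10.0):
    """Smoothed target-mean encoding; returns encoded train and test columns."""
    prior = train[target].mean()
    stats = train.groupby(col)[target].agg(["mean", "count"])
    # Blend each category mean with the global mean; rare categories lean on the prior
    smooth = (stats["count"] * stats["mean"] + m * prior) / (stats["count"] + m)
    # Categories unseen in train fall back to the global mean
    return train[col].map(smooth), test[col].map(smooth).fillna(prior)

train = pd.DataFrame({"OCP_CD": ["A", "A", "B", "B", "B"], "y": [1, 0, 1, 1, 1]})
test = pd.DataFrame({"OCP_CD": ["A", "C"]})
tr_enc, te_enc = mean_encode(train, test, "OCP_CD", "y", m=2.0)
print(tr_enc.tolist(), te_enc.tolist())
```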

Usage:

```python
# mean-encoding
```
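The usage snippet is also cut off. Encoding training rows with statistics that include their own target leaks the label, so one common remedy, assumed here rather than taken from the source, is out-of-fold encoding: each fold is encoded with category means computed on the other folds. `oof_mean_encode`, its parameters and the toy data are hypothetical.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import KFold

def oof_mean_encode(train, col, target, n_splits=5, seed=2019):
    """Encode each row with target means computed on the other folds only."""
    prior = train[target].mean()
    enc = pd.Series(np.nan, index=train.index)
    kf = KFold(n_splits=n_splits, shuffle=True, random_state=seed)
    for fit_idx, enc_idx in kf.split(train):
        fold_means = train.iloc[fit_idx].groupby(col)[target].mean()
        enc.iloc[enc_idx] = train.iloc[enc_idx][col].map(fold_means).to_numpy()
    # Categories missing from the other folds fall back to the global mean
    return enc.fillna(prior)

train = pd.DataFrame({"OCP_CD": list("AABBBCCDDA") * 2,
                      "y": [1, 0, 1, 1, 0, 0, 1, 1, 0, 1] * 2})
train["OCP_CD_ME"] = oof_mean_encode(train, "OCP_CD", "y")
print(train.head())
```

Test rows are then encoded with means from the full training set, as in a plain mean encoding.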

Models

Train/validation split

```python
from sklearn.model_selection import train_test_split
```
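A minimal hold-out split; the arrays are hypothetical, and `stratify=y` (an addition, not in the original) keeps the class ratio the same in both parts.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical feature matrix and balanced binary label
X = np.arange(20).reshape(10, 2)
y = np.array([0, 1] * 5)

# Hold out 20% for validation; stratify keeps the label ratio in both parts
X_train, X_val, y_train, y_val = train_test_split(
    X, y, test_size=0.2, random_state=2019, stratify=y)
print(X_train.shape, X_val.shape)  # (8, 2) (2, 2)
```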

LightGBM

```python
import lightgbm as lgb
```

Export feature importance

```python
importance = gbm.feature_importance()
```

Save the model

```python
gbm.save_model('./model.txt')
```

KFold

```python
from sklearn.model_selection import KFold
```
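A sketch of K-fold cross-validation that collects out-of-fold predictions for every training row and averages the fold models' predictions on a test set. `LogisticRegression` is only a lightweight stand-in; an `lgb.train` call would go in the same place. The data, fold count and seed are assumptions.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import KFold

# Hypothetical train and test features
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3))
y = (X[:, 0] - X[:, 1] > 0).astype(int)
X_test = rng.normal(size=(50, 3))

oof = np.zeros(len(X))             # out-of-fold prediction for each training row
test_pred = np.zeros(len(X_test))  # mean of the fold models' test predictions
kf = KFold(n_splits=5, shuffle=True, random_state=2019)
for fit_idx, val_idx in kf.split(X):
    model = LogisticRegression().fit(X[fit_idx], y[fit_idx])
    oof[val_idx] = model.predict_proba(X[val_idx])[:, 1]
    test_pred += model.predict_proba(X_test)[:, 1] / kf.get_n_splits()

print("OOF AUC:", roc_auc_score(y, oof))
```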

StackNet

```python
import lightgbm as lgb
```
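The StackNet section is cut off after the import. StackNet itself stacks models over multiple levels; the sketch below is not that tool but a plain two-level stack built by hand: out-of-fold predictions of a few base models become the features of a meta-model. The scikit-learn models stand in for whatever (presumably LightGBM) models the original used, and `oof_predictions` and the data are hypothetical.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold

def oof_predictions(model, X, y, X_test, kf):
    """Level-1 features: OOF predictions on train, fold-averaged predictions on test."""
    oof = np.zeros(len(X))
    test_pred = np.zeros(len(X_test))
    for fit_idx, val_idx in kf.split(X):
        model.fit(X[fit_idx], y[fit_idx])
        oof[val_idx] = model.predict_proba(X[val_idx])[:, 1]
        test_pred += model.predict_proba(X_test)[:, 1] / kf.get_n_splits()
    return oof, test_pred

# Hypothetical data with a non-linear decision boundary
rng = np.random.default_rng(0)
X = rng.normal(size=(300, 4))
y = ((X[:, 0] * X[:, 1] > 0) | (X[:, 2] > 1)).astype(int)
X_test = rng.normal(size=(60, 4))
kf = KFold(n_splits=5, shuffle=True, random_state=2019)

# Level 0: diverse base models
base_models = [LogisticRegression(),
               RandomForestClassifier(n_estimators=100, random_state=0)]
level1 = [oof_predictions(m, X, y, X_test, kf) for m in base_models]
S_train = np.column_stack([tr for tr, _ in level1])
S_test = np.column_stack([te for _, te in level1])

# Level 1: a simple meta-model trained on the stacked out-of-fold predictions
meta = LogisticRegression().fit(S_train, y)
final_pred = meta.predict_proba(S_test)[:, 1]
```

Training the meta-model only on out-of-fold predictions keeps it from learning how well the base models memorized their own training rows.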